Jun 2021 - Part one of six

Big data has been growing as a topic for a while now, and it is obvious that data is powerful. Data is indeed the new oil, and businesses everywhere are investing in data research. Many terms are now used to describe data and how it is organized. A data lake is one of them. So, what is it?

In simple words, a Data Lake is a centralized repository that collects, stores and organizes huge collections of data, including structured and semi-structured data. It also allows multiple organizational units (OUs) to explore and investigate the current state of their business in minutes. It gives users the ability to run ad-hoc analysis over a range of processing engines, such as serverless, in-memory, query and batch processing.

The challenge

In this series of blog posts I will explain how I translated the MVP core services for a large e-commerce company into Infrastructure-as-Code (IaC) using Terraform scripts to allow for fast and repeatable deployments, efficient testing and a shorter recovery time in case of an unplanned event. Version one of this Data Lake architecture uses the services covered in the sections below: Amazon EC2, Amazon Kinesis and Amazon S3.

Each of these services is a huge topic in its own right, so throughout this article I will highlight how they work and how I integrated them.

Diagram version 1: Data lake

Diagram final version: Data lake

What method will we be using to deploy this infrastructure?

We will deploy this infrastructure as code (IaC) using Terraform.

Amazon Elastic Compute Cloud (EC2)

EC2 is the backbone of this infrastructure, as it is dedicated to holding the large e-commerce data logs during the business analysis. It also provides resizable compute capacity for this environment. You can spin up a new server optimized for your workload in minutes and rapidly scale it up or down as your computing requirements change.

Amazon Kinesis

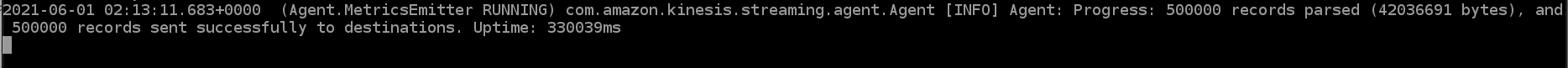

Kinesis plays a double part within this infrastructure. Firstly, the Kinesis Firehose stream captures data from a server log generated on our Amazon EC2 instance and delivers it to the data lake landing zone in your Amazon S3 bucket. Secondly, the Amazon Kinesis agent application runs on the instance and publishes that log data (“direct put”) into the Kinesis Firehose stream.
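To make the agent side more concrete, here is a minimal sketch in Python that builds the kind of JSON configuration the Kinesis agent reads (typically `/etc/aws-kinesis/agent.json`). The log path, delivery stream name and region are hypothetical placeholders, not the values used in this project.

```python
import json


def build_agent_config(log_pattern: str, delivery_stream: str, region: str) -> dict:
    """Return a Kinesis agent configuration with a single Firehose flow."""
    return {
        # Regional Firehose endpoint the agent sends records to.
        "firehose.endpoint": f"firehose.{region}.amazonaws.com",
        "flows": [
            {
                # Files the agent tails on the EC2 instance.
                "filePattern": log_pattern,
                # Firehose delivery stream that receives the records.
                "deliveryStream": delivery_stream,
            }
        ],
    }


# Hypothetical values, chosen only for illustration.
config = build_agent_config(
    log_pattern="/var/log/ecommerce/*.log",
    delivery_stream="datalake-firehose",
    region="us-east-1",
)
agent_json = json.dumps(config, indent=2)
print(agent_json)
```

Each entry in `flows` pairs a file pattern with a destination, so one agent could ship several log files to different streams if needed.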

Kinesis agent sample:

A powerful feature of Kinesis is the ability to configure how your data is stored in S3. You can configure it based on buffer size and buffer interval, and Firehose delivers a batch as soon as either threshold is reached. For this project I selected a buffer size of 5 megabytes, meaning that incoming data from the Firehose is written to S3 in files of roughly five megabytes each. For the buffer interval I chose the lowest value, which at the time of writing is 60 seconds. Tip to remember: Kinesis Firehose is “almost real-time”, and the buffer interval cannot go lower than that.
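The size-or-interval rule can be illustrated with a toy model in Python. This is only a sketch of the idea under simplifying assumptions (it checks the interval only when a new record arrives, and the class and field names are made up); it is not how Firehose is implemented internally.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

MB = 1024 * 1024


@dataclass
class FirehoseBufferSketch:
    """Toy model of size-or-interval buffering (hypothetical, for illustration)."""

    max_bytes: int = 5 * MB      # buffer size used in this project
    max_seconds: float = 60.0    # buffer interval used in this project
    size: int = 0
    opened_at: Optional[float] = None
    flushes: List[Tuple[str, int]] = field(default_factory=list)

    def _flush(self, reason: str) -> None:
        # Record why the batch was delivered and how large it was.
        self.flushes.append((reason, self.size))
        self.size = 0
        self.opened_at = None

    def add(self, record_bytes: int, now: float) -> None:
        # Interval check: deliver data that has waited max_seconds or longer.
        if self.size > 0 and now - self.opened_at >= self.max_seconds:
            self._flush("interval")
        if self.size == 0:
            self.opened_at = now
        self.size += record_bytes
        # Size check: deliver as soon as the buffer reaches max_bytes.
        if self.size >= self.max_bytes:
            self._flush("size")


buf = FirehoseBufferSketch()
for second in range(5):
    buf.add(1 * MB, now=float(second))  # 5 MB within 5 seconds: size flush
buf.add(1 * MB, now=10.0)               # opens a new buffer at t=10
buf.add(1 * MB, now=75.0)               # 65 s later: interval flush of the 1 MB
print(buf.flushes)
```

With a busy log the size threshold dominates and files land in S3 at about 5 MB each; with a quiet log the 60-second interval dominates and the files are smaller.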

Amazon Simple Storage Service (S3)

S3 is one of the largest and most performant data lake storage solutions, thanks to its cost-effective, secure storage with 99.999999999% (11 nines) durability and its virtually unlimited scalability. It makes sense to store your vast data logs in S3, don’t you think?

The goal for individuals or businesses using this data lake solution is to integrate Amazon S3 with Amazon Kinesis, Amazon Athena, Amazon Redshift Spectrum and AWS Glue, so that data scientists and engineers can query and process large amounts of data.
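When querying the landing zone later, it helps to know where the objects end up. Unless a custom prefix is configured, Firehose writes objects under a UTC time prefix of the form `YYYY/MM/dd/HH/`. The sketch below computes that prefix in Python; whether this project keeps the default prefix is an assumption, and the function name is made up for illustration.

```python
from datetime import datetime, timezone


def default_firehose_prefix(arrival: datetime) -> str:
    """Return the UTC YYYY/MM/dd/HH/ prefix for a given arrival time."""
    return arrival.astimezone(timezone.utc).strftime("%Y/%m/%d/%H/")


# Hypothetical arrival time, used only as an example.
arrival = datetime(2021, 6, 15, 9, 30, tzinfo=timezone.utc)
prefix = default_firehose_prefix(arrival)
print(prefix)  # 2021/06/15/09/
```

A time-based layout like this lets query engines narrow a scan to the hours of interest instead of reading the whole bucket.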

S3 data stream logs sample:

It is important to note that this infrastructure is not fully developed. I will be adding other services such as AWS Glue, Amazon Athena, Amazon Redshift, Amazon CloudWatch and Amazon QuickSight 😊 Please stay tuned.

Functions, arguments and expressions of Terraform that were used in the above project:

Find the Terraform repo and directions for this project here

I would like to give a big shout-out to my mentor Derek Morgan. Thank you for all of your support over these months and for the amazing course "More Than Certified in Terraform", the best course out there. Link to the course here. If you want to connect with him and ask questions about his course, contact him via LinkedIn Derek Morgan or join the TechStudySlack channel here.